The Commerce Department’s National Institute of Standards and Technology (NIST) said it was seeking public input on key testing needed to ensure the safety of AI systems.

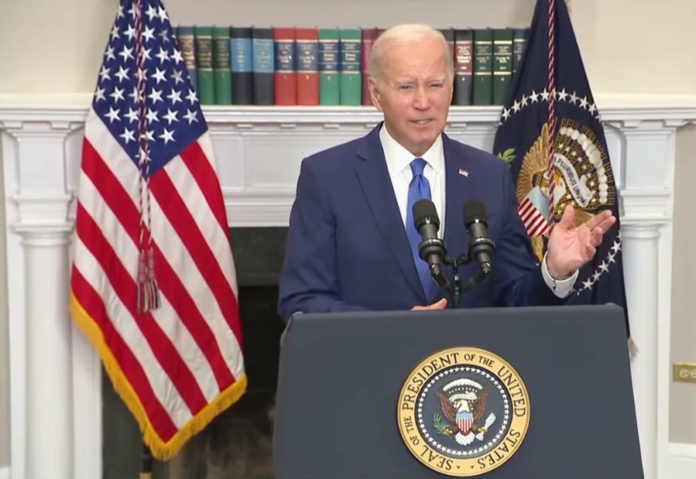

On Tuesday, the Biden administration announced a first step toward developing key standards and guidance for the safe deployment of generative artificial intelligence (AI), including how to test and safeguard these systems.

NIST said it was seeking public input, due by Feb. 2, on conducting the testing that is crucial to ensuring the safety of AI systems.

Commerce Secretary Gina Raimondo said the effort was prompted by President Joe Biden’s executive order on AI and aims to develop “industry standards around artificial intelligence safety, security, and trust that will enable America to continue leading the world in the responsible development and use of this rapidly evolving technology.”

Generative AI, which can create text, photos, and videos in response to open-ended prompts, has stirred both excitement and concern in recent months amid fears that it could make some industries obsolete, upend elections, and potentially overpower humans.

Biden’s order directed agencies to set standards for this testing and to address related chemical, biological, radiological, nuclear, and cybersecurity risks.

NIST is drafting testing guidelines, including where so-called “red-teaming” would be most beneficial for AI risk assessment and management, along with best practices for carrying it out.

External red-teaming, in which an outside group plays the adversary and probes a system for weaknesses, has been used in cybersecurity for years to identify new risks. The term comes from U.S. Cold War simulations in which the enemy was called the “red team.”

In August, the first-ever public red-teaming assessment event in the United States was held during a major cybersecurity conference. It was organized by AI Village, SeedAI, and Humane Intelligence.

Thousands of participants tried to see whether they “could make the systems produce undesirable outputs or otherwise fail, with the goal of better understanding the risks that these systems present,” according to the White House.

The event “demonstrated how external red-teaming can be an effective tool to identify novel AI risks,” the White House added.

About us:

CIO News, a property of Mercadeo, produces award-winning content and resources for IT leaders across industries through print articles and recorded video interviews on technology topics such as Digital Transformation, Artificial Intelligence (AI), Machine Learning (ML), Cloud, Robotics, Cybersecurity, Data, Analytics, SOC, and SASE.